Robots can now learn like humans, using self-awareness

Robots are learning in a way that was once thought to be exclusive to humans – by watching themselves.

A recent study shows that robots can use simple video footage to understand their own structure and movement. This ability allows them to adjust their actions and even recover from damage without any human intervention.

Researchers at Columbia University have developed a method that enables robots to achieve a deeper understanding of their own bodies.

Instead of relying on engineers to create detailed simulations, these robots watch their own movements through a regular camera and teach themselves how their bodies work.

By developing “self-awareness,” these machines could revolutionize automation, making robots more independent, adaptable, and efficient in real-world environments like homes, factories, and disaster zones.

Learning from observation

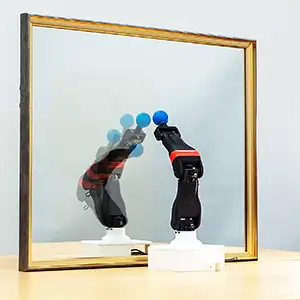

“Like humans learning to dance by watching their mirror reflection, robots now use raw video to build kinematic self-awareness,” explained study lead author Yuhang Hu, a doctoral student at the Creative Machines Lab at Columbia University.

“Our goal is a robot that understands its own body, adapts to damage, and learns new skills without constant human programming.”

Traditionally, robots are first trained in digital simulations. These simulations help the robots understand movement before they are deployed in the real world.

“The better and more realistic the simulator, the easier it is for the robot to make the leap from simulation into reality,” said Professor Hod Lipson, chair of the Department of Mechanical Engineering.

However, designing these simulations is a time-consuming and complex task that often requires expert knowledge.

The researchers found a way to bypass this step by letting robots build their own simulations from video of themselves moving.

Self-awareness in robots

The study’s breakthrough came from using deep neural networks to infer 3D movement from 2D video.

This means the robot can understand its own shape and how it moves, without needing sophisticated sensors.

More importantly, it can detect changes in its body – such as a bent arm – and adjust its actions accordingly. This kind of adaptability has real-world benefits.

“Imagine a robot vacuum or a personal assistant bot that notices its arm is bent after bumping into furniture,” said Hu.

“Instead of breaking down or needing repair, it watches itself, adjusts how it moves, and keeps working. This could make home robots more reliable – no constant reprogramming required.”

In industrial settings, this capability could be just as valuable. For example, a robot arm in a car factory could get knocked out of alignment and need to be readjusted.

“Instead of halting production, it could watch itself, tweak its movements, and get back to welding, which would cut downtime and costs. This adaptability could make manufacturing more resilient,” noted Hu.
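
The watch, compare, and adjust loop described in this section can be illustrated with a toy example. This is only a minimal sketch and not the study's method: the researchers use deep neural networks on raw video, whereas the code below assumes a hypothetical planar two-link arm whose tip position is already known from the camera, and it fits the link lengths with ordinary least squares. The function names, the link lengths, the shortened link standing in for a "bent" arm, and the 0.05 error threshold are all assumptions made for illustration.

```python
import math

def forward(lengths, angles):
    """Predict the 2D tip position of a planar two-link arm (the toy self-model)."""
    l1, l2 = lengths
    a1, a2 = angles
    x = l1 * math.cos(a1) + l2 * math.cos(a1 + a2)
    y = l1 * math.sin(a1) + l2 * math.sin(a1 + a2)
    return x, y

def fit_lengths(samples):
    """Least-squares fit of both link lengths from (angles, observed tip) pairs."""
    # The tip position is linear in (l1, l2), so we accumulate 2x2 normal equations.
    s11 = s12 = s22 = b1 = b2 = 0.0
    for (a1, a2), (x, y) in samples:
        c1, n1 = math.cos(a1), math.sin(a1)
        c2, n2 = math.cos(a1 + a2), math.sin(a1 + a2)
        s11 += c1 * c1 + n1 * n1
        s12 += c1 * c2 + n1 * n2
        s22 += c2 * c2 + n2 * n2
        b1 += c1 * x + n1 * y
        b2 += c2 * x + n2 * y
    det = s11 * s22 - s12 * s12
    return ((s22 * b1 - s12 * b2) / det, (s11 * b2 - s12 * b1) / det)

def mean_error(lengths, samples):
    """Average distance between where the model predicts the tip and where it was seen."""
    total = 0.0
    for angles, (x, y) in samples:
        px, py = forward(lengths, angles)
        total += math.hypot(px - x, py - y)
    return total / len(samples)

def observe(true_lengths, angle_list):
    """Stand-in for the camera: returns the tip position of the real (simulated) arm."""
    return [(a, forward(true_lengths, a)) for a in angle_list]

# Hypothetical joint angles the robot moves through while watching itself.
angles = [(0.1 * i, 0.3 + 0.2 * i) for i in range(10)]

# 1. Build a self-model from observations of the undamaged arm.
healthy_model = fit_lengths(observe((1.0, 0.8), angles))

# 2. The arm is damaged: the second link is now effectively shorter (assumed values).
damaged_view = observe((1.0, 0.6), angles)
error = mean_error(healthy_model, damaged_view)

# 3. If predictions no longer match what the camera sees, refit the self-model.
model = healthy_model
if error > 0.05:  # threshold chosen arbitrarily for this sketch
    model = fit_lengths(damaged_view)

print("prediction error before refit:", round(error, 3))
print("updated link lengths:", tuple(round(v, 3) for v in model))
```

The design choice worth noting is that the robot never needs to be told it was damaged. A growing gap between what its self-model predicts and what the camera shows is the signal to relearn.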

Why robots need self-awareness

As robots become more integrated into everyday life, their ability to operate independently will be essential.

“We humans cannot afford to constantly baby these robots, repair broken parts and adjust performance. Robots need to learn to take care of themselves if they are going to become truly useful,” said Professor Lipson. “That’s why self-modeling is so important.”

This study builds on two decades of research at Columbia University. During this time, the researchers have been developing ways for robots to create self-models using cameras and other sensors.

In 2006, their robots could only generate simple stick-figure-like simulations. A decade later, they advanced to higher-fidelity models using multiple cameras.

The future of self-sufficient robots

Now, for the first time, a robot has managed to build a complete kinematic model of itself using just a short video clip from a single standard camera – input that is similar to looking in a mirror.

“We humans are intuitively aware of our body; we can imagine ourselves in the future and visualize the consequences of our actions well before we perform those actions in reality,” explained Professor Lipson.

“Ultimately, we would like to imbue robots with a similar ability to imagine themselves, because once you can imagine yourself in the future, there is no limit to what you can do.”

The ability of robots to self-model and adapt is a significant step forward. It could lead to more resilient machines that require less human oversight, and pave the way for smarter, more independent robots in homes, factories, and beyond.

The full study was published in the journal Nature Machine Intelligence.
